If generative AI feels like a creative superpower, copyright law can feel like the kryptonite. The rules were written for human hands, not hyper-scale models trained on oceans of data. You are not imagining the gray areas—they are real, and they matter for anyone publishing, selling, or relying on AI-assisted work.

The good news: you do not need to be a lawyer to make better decisions. With a few practical guardrails and a working map of the legal terrain, you can ship work you are proud of, reduce risk, and stay ready as the rules evolve.

This article breaks down the core issues—training data, authorship, ownership of outputs—and turns them into everyday practices for creators, teams, and leaders. You will also see how popular tools like ChatGPT, Claude, and Gemini fit into the picture.

The big question: who owns AI-assisted work?

Copyright protects original works of authorship fixed in a tangible medium. That classic definition hides a critical word: authorship. In the U.S., the Copyright Office has said that purely machine-generated content is not protected because there is no human author. But that does not mean AI is a dead end for copyright.

Think of AI like a camera on steroids. If you compose the shot (prompting carefully, curating iterations, editing heavily), your human contribution can rise to the level of authorship. If you click a single button and publish the first output, it probably will not. As a rule of thumb, the more human direction, transformation, and editing you add, the stronger your claim to protection in the parts you authored.

Practical takeaway: document your creative process. Save prompts, drafts, and edits. When you register a work, disclose AI involvement and claim the parts you actually authored. It is boring paperwork—but it is also a shield.
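One lightweight way to keep that record is an append-only log file. The sketch below is a minimal illustration, not a legal standard: the `log_step` helper, the stage labels, and the field names are all hypothetical choices made for this example.

```python
import hashlib
import json
from datetime import datetime, timezone
from pathlib import Path


def log_step(log_path, stage, text, note=""):
    """Append one creative step (prompt, draft, or edit) to a JSON Lines log."""
    entry = {
        # UTC timestamp so entries from different machines sort consistently
        "timestamp": datetime.now(timezone.utc).isoformat(),
        # Hypothetical stage labels, e.g. "prompt", "ai_draft", "human_edit"
        "stage": stage,
        # Fingerprint of the exact text, useful for matching saved files later
        "sha256": hashlib.sha256(text.encode("utf-8")).hexdigest(),
        "text": text,
        "note": note,
    }
    # Open in append mode so earlier entries are never overwritten
    with Path(log_path).open("a", encoding="utf-8") as f:
        f.write(json.dumps(entry) + "\n")
    return entry
```

A call such as `log_step("process_log.jsonl", "human_edit", draft_text, note="rewrote the intro")` adds one line per step, which keeps the log easy to read, diff, and hand over if you are ever asked how a piece was made.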

Training data: fair use or free-for-all?

Models learn from vast datasets drawn from the open web, licensed archives, or private corpora. Two legal questions keep popping up:

- Is ingesting copyrighted works for training a fair use (a U.S. doctrine that permits some unlicensed uses, such as research, commentary, or transformative use, judged case by case)?

- Do model outputs reproduce protected elements of the works they learned from?

Courts have not given a single definitive answer. Plaintiffs in several lawsuits argue that training is unauthorized copying; defendants counter that statistical learning is transformative. A key risk point is memorization, which is when a model reproduces long, recognizable chunks from its training data.

Here is a simple analogy: reading a thousand novels to learn how to write is fine; photocopying a chapter and selling it is not. If a model sometimes photocopies by accident, providers need to curb that, and users should avoid prompts that invite copying.

For a timely overview of how U.S. regulators are approaching these questions, see the U.S. Copyright Office’s Artificial Intelligence Initiative page, which collects current guidance and updates.

Authorship thresholds: how much is enough?

The threshold is not mathematically precise, but a few rules of thumb help:

- Idea vs. expression: You cannot copyright an idea like “a noir detective in space,” but you can copyright your specific expression of it. Prompts that list ideas are not authorship. Your narrative structure, dialogue, and edits are.

- Selection and arrangement matter: A creative selection and arrangement of AI outputs can be protectable as a collection, even if the individual elements are not.

- Substantial human editing is key: If you rewrite, composite, transform, and art-direct, you are adding original expression.

If you are working with images, think in stages: concept prompts, reference boards, roughs, comps, retouching. The more choices you make, the stronger your claim.

Real-world examples you can learn from

- Marketing team scenario: You prompt a model to write a blog post “in the style of” a living author. Risk flags: style mimicry plus named authors can pull outputs too close to a specific creator. Safer approach: describe attributes (“wry, conversational tone; short sentences; three analogies”) and add your unique structure and examples.

- Product design scenario: You ask for a UI identical to a competitor’s app. Risk flags: trade dress and distinctive elements. Safer approach: prompt for functional patterns (“card-based layout for a recipe app; large legible typography; high contrast for accessibility”) and then design original components.

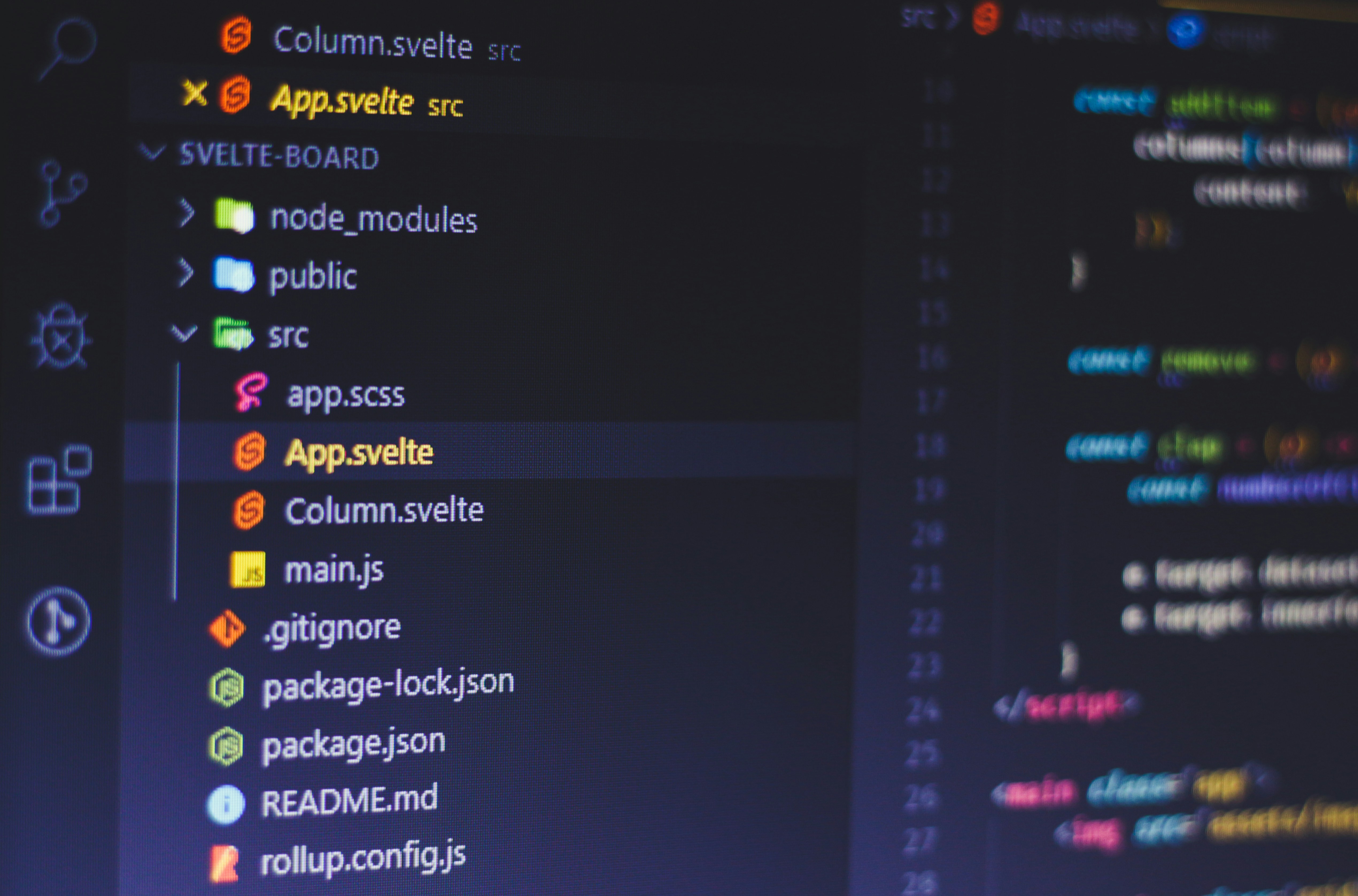

- Code scenario: You accept a model’s code verbatim. Risk flags: the output may include snippets covered by the GPL or another license with obligations you have not met. Safer approach: use models for scaffolding, then rewrite, comment, and run a license scanner; keep attribution when required.
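For the code scenario, a quick first pass can look for obvious license markers before a proper scan. The following is a naive sketch and not a substitute for a dedicated license scanner: the `find_license_markers` helper and its three patterns are illustrative assumptions, and they catch only text that announces itself.

```python
import re

# Illustrative patterns only; a real license scanner covers far more cases.
LICENSE_PATTERNS = {
    "spdx_tag": re.compile(r"SPDX-License-Identifier:\s*(\S+)"),
    "gpl_text": re.compile(
        r"GNU (Lesser |Affero )?General Public License", re.IGNORECASE
    ),
    "copyright_notice": re.compile(
        r"Copyright\s+(\(c\)|©)?\s*\d{4}", re.IGNORECASE
    ),
}


def find_license_markers(code):
    """Return (line_number, marker_name, line_text) for each flagged line."""
    hits = []
    for number, line in enumerate(code.splitlines(), start=1):
        for name, pattern in LICENSE_PATTERNS.items():
            if pattern.search(line):
                hits.append((number, name, line.strip()))
    return hits
```

An empty result does not mean the code is clean. Copied code often arrives without its header, which is why rewriting and a real scanner still matter.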

Ongoing lawsuits involving news publishers, image libraries, and model providers underscore these patterns: the closer your output gets to a recognizable, specific source, the higher the risk.

What popular tools say—and what that means for you

- ChatGPT (OpenAI), Claude (Anthropic), and Gemini (Google) all publish usage policies and offer enterprise features intended to reduce memorization and block disallowed prompts. Read those pages before you deploy.

- Some vendors (notably OpenAI, Microsoft, and Google in certain enterprise tiers) advertise IP indemnification for qualifying customers. That is helpful, but it is not a blanket pass: you must follow their policies and stay within usage scopes, and the coverage may not extend to every claim.

- Providers are rolling out content filters, provenance signals, and watermarks. These are useful but not foolproof. Treat them as speed bumps, not seatbelts.

Bottom line: tool assurances are part of your risk posture, not a substitute for internal practices.

Practical guardrails for creators and teams

Build a simple, repeatable framework. It does not have to be heavy to be effective.

- Set prompt hygiene rules:

  - Avoid “in the style of [living creator]” instructions. Use attributes, not names.

  - Do not paste proprietary or confidential text into public tools.

  - Ask for transformations, not duplication (“summarize and synthesize,” “create an original take”).

- Strengthen human authorship:

  - Plan your creative steps in advance and identify where you will add original expression.

  - Edit aggressively: structure, voice, examples, and design choices that are unmistakably yours.

  - Keep an audit trail: prompts, drafts, edits, timestamps.

- Reduce training-data echoes:

  - Use enterprise models with memorization controls and content filters when available.

  - Test for lookalikes by running reverse image or text search on key phrases or visuals.

- License smartly:

  - Prefer licensed datasets and stock providers that offer AI-safe catalogs when you need source material.

  - For music, fonts, and images, check whether your license permits AI-assisted derivatives.

- Review and escalate:

  - Flag outputs that feel too close to a known work for a human review.

  - Maintain a lightweight IP checklist before publishing.
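The lookalike test for text can be partly automated once a search turns up a candidate source. The sketch below uses Python's standard `difflib` module to find the longest run of consecutive words that an output shares with a reference text. The helper names and the eight-word threshold are assumptions for illustration; the threshold is not a legal test, only a trigger for human review.

```python
from difflib import SequenceMatcher


def longest_shared_run(output, reference):
    """Return the longest run of consecutive words found in both texts."""
    a = output.lower().split()
    b = reference.lower().split()
    # autojunk=False stops difflib from ignoring very common words
    matcher = SequenceMatcher(None, a, b, autojunk=False)
    match = matcher.find_longest_match(0, len(a), 0, len(b))
    return " ".join(a[match.a:match.a + match.size])


def needs_review(output, reference, max_words=8):
    """Flag the output when the shared run reaches max_words (arbitrary)."""
    return len(longest_shared_run(output, reference).split()) >= max_words
```

This catches verbatim overlap only. Close paraphrase and visual similarity still need a human eye, which is why the flagged items go to review rather than straight to rejection.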

Governance for leaders: policies that people actually follow

Policies fail when they are vague or too long. Yours should fit on one page and answer three questions: what we will do, what we will not do, and how we decide the edge cases.

- Scope: define approved tools (e.g., ChatGPT Enterprise, Claude for Work, Gemini for Workspace) and where they may be used.

- Prohibitions: no prompts asking for living artists’ styles; no confidential data in public tools; no verbatim code acceptance without review.

- Approvals: a short path for exceptions, with legal or compliance sign-off.

- Documentation: require prompt and edit logs for high-visibility content.

- Training: give teams real examples, not just rules, and refresh quarterly.

Make it easy to comply. Provide templates for prompt briefs, review checklists, and model cards describing what each tool is good for.

Myths to retire so you can move faster

- “If it is AI, it cannot be copyrighted.” False. AI-assisted works can be protected when your human contribution is original and substantial.

- “If it came from a model, I am automatically safe.” False. You are responsible for what you publish, including potential infringements and defamation.

- “Style is always free to copy.” Not exactly. Style per se is not protected, but copying protected expression or producing confusingly similar works can still create legal and reputational risk.

A simple decision tree you can use today

When in doubt, run these quick checks:

- Does this output feel recognizable as a specific source? If yes, iterate again or change direction.

- Did I add original structure, voice, or design? If no, add more human authorship.

- Do I have the rights to any source material I combined? If no, license or replace.

- Would I feel comfortable defending this in public? If no, escalate for review.
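If your team tracks reviews in a script or form, the four checks above translate directly into a small function. This is a sketch under stated assumptions: the `OutputReview` fields and the `next_actions` helper are hypothetical names, and the answers still come from a person, not from the code.

```python
from dataclasses import dataclass


@dataclass
class OutputReview:
    recognizable_source: bool   # feels like a specific existing work
    added_original_work: bool   # original structure, voice, or design added
    has_source_rights: bool     # rights cleared for any combined material
    defensible_in_public: bool  # comfortable defending it publicly


def next_actions(review):
    """Map the four answers to the follow-up actions from the checklist."""
    actions = []
    if review.recognizable_source:
        actions.append("iterate again or change direction")
    if not review.added_original_work:
        actions.append("add more human authorship")
    if not review.has_source_rights:
        actions.append("license or replace source material")
    if not review.defensible_in_public:
        actions.append("escalate for review")
    # No flags raised: hand off to the normal pre-publication checklist
    return actions or ["ready for the pre-publication checklist"]
```

Returning every applicable action, rather than stopping at the first failure, lets a reviewer see all open issues in one pass.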

Conclusion: create boldly, document clearly, and stay adaptable

The law is evolving, but your workflow can be stable. Treat models as collaborators that need direction, not vending machines. Put human authorship and process documentation at the center, and you will reduce risk while preserving the speed and scope that make AI worth using.

Next steps you can take this week:

- Draft a one-page AI content policy with do/do not lists and an escalation path.

- Set up a simple prompt-to-publication log (a shared folder or tool) for your team.

- Pilot two workflows—one for text with ChatGPT or Claude, one for images with a licensed source—and run them through your new review checklist.

Keep your curiosity high and your paper trail tidy. That combination will carry you through the gray areas with confidence.